TensorFlow

TensorFlow is an end-to-end open-source machine learning (ML) platform. It helps developers build deep learning applications and is widely used for training deep neural networks and running inference with them. It is a comprehensive, flexible ecosystem of tools, libraries, and community resources.

TensorFlow handles large amounts of data through multi-dimensional arrays called tensors. Computations are expressed as data flow graphs, which allows code to be executed in a distributed fashion across a cluster of machines.
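To make the idea of a tensor as a multi-dimensional array more concrete, here is a small plain-Python sketch. It does not use TensorFlow itself: `tensor_shape` is a hypothetical helper written for this illustration, and it assumes regularly nested lists (every sub-list at a given depth has the same length).

```python
def tensor_shape(data):
    """Return the shape of a regularly nested list as a tuple.

    Hypothetical helper for illustration only; not part of TensorFlow.
    """
    shape = []
    while isinstance(data, list):
        shape.append(len(data))
        if not data:
            break  # an empty list has no further dimensions to inspect
        data = data[0]
    return tuple(shape)


# Example values of increasing rank (number of dimensions).
examples = {
    "scalar": 7,
    "vector": [1, 2, 3],
    "matrix": [[1, 2, 3], [4, 5, 6]],
    "cube": [[[0, 1], [2, 3]], [[4, 5], [6, 7]], [[8, 9], [10, 11]]],
}

for name, value in examples.items():
    shape = tensor_shape(value)
    print(f"{name}: rank={len(shape)}, shape={shape}")
```

With TensorFlow installed, `tf.constant(value).shape` should report the same dimensions for each of these values.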

You can subscribe to the TensorFlow product in AWS Marketplace and launch an instance from the product's AMI using the Amazon EC2 launch wizard.

Usage / Deployment Instructions

To access the application:

Step 1: SSH into the EC2 instance using PuTTY or a terminal, with the instance's public IP address and the key pair that you generated.

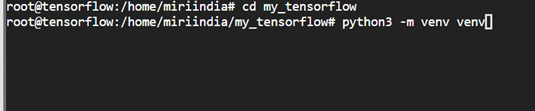

Step 2: Create a directory for the virtual environment and move into it

$ mkdir my_tensorflow

$ cd my_tensorflow

Step 3: $ python3 -m venv venv

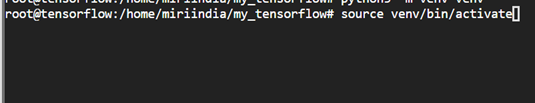

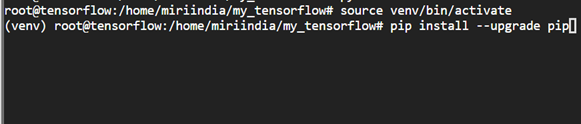

Step 4: $ source venv/bin/activate

Step 5: $ pip install --upgrade pip

Step 6: $ pip install --upgrade tensorflow

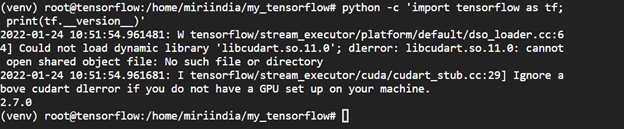

Step 7: $ python -c 'import tensorflow as tf; print(tf.__version__)'

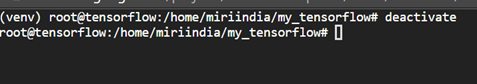

Step 8: $ deactivate
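The check in Step 7 can also be written as a short script, which may be handy if you want a clearer message when the package is missing. This is a minimal sketch to run while the virtual environment is still active (before Step 8); `tensorflow_version` is a hypothetical helper name, not something provided by TensorFlow.

```python
import importlib.util


def tensorflow_version():
    """Return the installed TensorFlow version string, or None if it is missing."""
    # find_spec returns None when the package cannot be found on sys.path.
    if importlib.util.find_spec("tensorflow") is None:
        return None
    import tensorflow as tf  # imported lazily so the script works without it

    return tf.__version__


version = tensorflow_version()
if version is None:
    print("TensorFlow is not installed in this environment")
else:
    print(f"TensorFlow {version} is installed")
```

If the script reports that TensorFlow is not installed, confirm that the environment from Step 4 is active and repeat Step 6.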

All your queries are important to us. Please feel free to connect.

24x7 support is provided for all customers.

We are happy to help you.

Submit your Query: https://miritech.com/contact-us/

TensorFlow can be used from Python, JavaScript, Java, and C++.

It supports both CPUs and GPUs. GPUs are especially useful for deep learning applications, where they speed up the large matrix operations involved in training.
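To see which of these devices TensorFlow can use on a given instance, something like the following sketch may help. It relies on `tf.config.list_physical_devices`, which is available in TensorFlow 2.1 and later; `list_gpus` is a hypothetical wrapper that returns an empty list when TensorFlow is not installed.

```python
import importlib.util


def list_gpus():
    """Return the names of GPUs visible to TensorFlow (empty if none or no TensorFlow)."""
    if importlib.util.find_spec("tensorflow") is None:
        return []
    import tensorflow as tf  # imported lazily so the script works without it

    return [device.name for device in tf.config.list_physical_devices("GPU")]


gpus = list_gpus()
if gpus:
    print("GPUs visible to TensorFlow:", ", ".join(gpus))
else:
    print("No GPU detected; TensorFlow will run on the CPU")
```

On an EC2 instance type without a GPU, or without the GPU drivers installed, the list is expected to be empty and TensorFlow falls back to the CPU.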

Developers can deploy models across a variety of platforms, including servers, the cloud, mobile and edge devices, browsers, and other JavaScript environments.

The flexible architecture of TensorFlow makes it easy to develop and publish complex models, from conceptualization to execution.
